The process

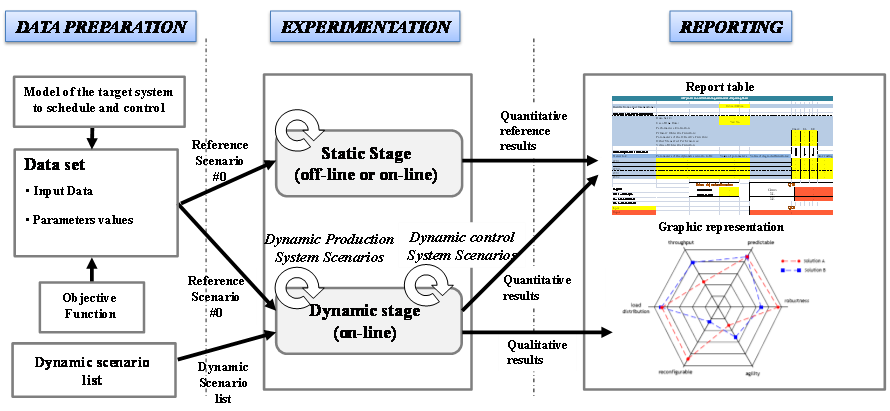

The proposed benchmarking process consists of three consecutive steps, presented below.

Data preparation

This step concerns the sizing and parameterization of the case study. Given a generic model of the target system to be scheduled and controlled, the first benchmarking decision is to choose the “data set”. A data set usually includes an instance of a model of the target system on which the benchmark is applied, together with the input data needed to make this model work. Once the data set is chosen, the second decision concerns the definition of the objective function. The couple (data set, objective function) defines the reference scenario, called scenario #0. The third decision is whether or not dynamic behaviour should be tested. If so, the fourth and last decision is to select, from a list of dynamic scenarios, those the researchers wish to test.
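The four data-preparation decisions can be captured in a small data structure. The following is a minimal sketch under stated assumptions: the class names `DataSet` and `Scenario`, the function `prepare`, and the catalogue `DYNAMIC_CATALOGUE` are hypothetical and are not part of the benchmark specification. Scenario #0 is simply the couple (data set, objective function), and dynamic scenarios may only be chosen from a predefined list.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical representation of the four data-preparation decisions.
# Field names and the scenario catalogue are illustrative assumptions.

@dataclass(frozen=True)
class DataSet:
    """Decision 1: a model instance of the target system plus its input data."""
    name: str
    instance: Dict[str, object]  # e.g. machines and routings (assumed)
    inputs: Dict[str, object]    # e.g. jobs to release (assumed)

@dataclass(frozen=True)
class Scenario:
    """The couple (data set, objective function); id 0 is the reference scenario."""
    scenario_id: int
    data_set: DataSet
    objective: Callable[[Dict[str, float]], float]  # decision 2

# Hypothetical list of dynamic scenarios the benchmark could offer.
DYNAMIC_CATALOGUE = ["machine_breakdown", "rush_order", "controller_failure"]

def prepare(data_set: DataSet,
            objective: Callable[[Dict[str, float]], float],
            test_dynamic: bool,
            selected: Optional[List[str]] = None) -> Tuple[Scenario, List[str]]:
    """Return scenario #0 and the dynamic scenarios chosen for testing."""
    scenario0 = Scenario(0, data_set, objective)
    if not test_dynamic:          # decision 3: static behaviour only
        return scenario0, []
    selected = selected or []
    unknown = [s for s in selected if s not in DYNAMIC_CATALOGUE]
    if unknown:                   # decision 4: choices must come from the list
        raise ValueError(f"unknown dynamic scenarios: {unknown}")
    return scenario0, list(selected)

if __name__ == "__main__":
    ds = DataSet("cell-1", {"machines": ["M1", "M2"]}, {"jobs": ["J1", "J2"]})
    # Objective assumed here: makespan, i.e. the latest job completion time.
    cmax = lambda completions: max(completions.values())
    s0, dyn = prepare(ds, cmax, True, ["machine_breakdown"])
    print(s0.scenario_id, dyn)
```

Rejecting unknown scenario names keeps the fourth decision restricted to the predefined list, which is what makes results from different research teams comparable.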

Once defined, scenario #0 contains only static data (i.e., all the data is known at the initial date), which allows researchers to test deterministic optimization mechanisms for a given set of inputs (e.g., OR approaches, simulation or emergent approaches, multi-agent approaches), especially if only a few constraints are relaxed. At this stage, performance measures are purely quantitative. Scenario #0 can be used to compare different optimization approaches, to evaluate the improvement of certain criteria (e.g., Cmax values), or to check the basic real-time behavior of a control system when all data are known initially.
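Because every input of scenario #0 is known at the initial date, a deterministic method can compute a complete schedule up front and report its Cmax. The sketch below uses a simple greedy earliest-completion heuristic on a flexible job-shop instance. It is an illustrative stand-in, not the optimization approach evaluated in this paper. The instance format and the function name `greedy_schedule` are assumptions.

```python
from typing import Dict, List, Tuple

# Assumed instance format: each job is an ordered list of operations, and each
# operation maps its eligible machines to processing times (flexible job shop).
Instance = Dict[str, List[Dict[str, int]]]
Entry = Tuple[str, int, str, int, int]  # (job, op index, machine, start, end)

def greedy_schedule(instance: Instance) -> Tuple[List[Entry], int]:
    """Greedy earliest-completion heuristic; returns the schedule and its Cmax.

    Operations are taken round-robin across jobs (one operation per job per
    pass). Each one is appended after the job's previous operation and after
    the chosen machine becomes free, on the eligible machine that finishes it
    earliest. No gap insertion is attempted.
    """
    machine_free: Dict[str, int] = {}
    job_ready = {job: 0 for job in instance}
    next_op = {job: 0 for job in instance}
    schedule: List[Entry] = []
    remaining = sum(len(ops) for ops in instance.values())
    while remaining:
        for job, ops in instance.items():
            k = next_op[job]
            if k == len(ops):
                continue  # this job is already fully scheduled
            best = None
            for machine, duration in ops[k].items():
                start = max(job_ready[job], machine_free.get(machine, 0))
                end = start + duration
                if best is None or end < best[2]:
                    best = (machine, start, end)
            machine, start, end = best
            schedule.append((job, k, machine, start, end))
            machine_free[machine] = end
            job_ready[job] = end
            next_op[job] = k + 1
            remaining -= 1
    cmax = max(entry[-1] for entry in schedule) if schedule else 0
    return schedule, cmax

if __name__ == "__main__":
    toy = {
        "J1": [{"M1": 3, "M2": 5}, {"M2": 2}],
        "J2": [{"M1": 4}, {"M1": 2, "M2": 3}],
    }
    plan, makespan = greedy_schedule(toy)
    print(makespan)  # 9 for this toy instance
```

Any other static method (an exact OR model, a metaheuristic, a multi-agent negotiation) could replace the heuristic, provided it reports the same criterion on the same scenario #0.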

Experimentation

It is composed of two kinds of experiments:

- the static stage, which concerns the treatment of the reference scenario (i.e., scenario #0), and

- the dynamic stage, which concerns the treatment of the dynamic scenarios.

If the researchers chose in the previous step to test dynamic behaviour, they execute two types of dynamic scenarios, introducing perturbations 1) on the target system and 2) on the control system itself. At this stage, researchers can test control approaches and algorithms using scenario #0 into which dynamic events are inserted, which defines several further scenarios of increasing complexity.
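One way to derive dynamic scenarios from scenario #0 is sketched below. The rule that scenario k inserts the first k events, ordered by date, is an assumption chosen only to illustrate "increasing complexity". It is not the benchmark's own definition. `DynamicEvent`, `PERTURBED_PARTS`, and `derive_scenarios` are hypothetical names. The two perturbation families mirror the text: the target system and the control system.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical labels for the two perturbation families named in the text.
PERTURBED_PARTS = ("target_system", "control_system")

@dataclass(frozen=True)
class DynamicEvent:
    time: int          # date at which the event occurs (unit assumed)
    perturbs: str      # which part is perturbed: one of PERTURBED_PARTS
    description: str   # free-text label, e.g. "machine M2 breaks down"

def derive_scenarios(
        events: List[DynamicEvent]) -> List[Tuple[int, Tuple[DynamicEvent, ...]]]:
    """Derive scenarios 1..n from scenario #0.

    Assumption: scenario k inserts the first k events (sorted by date) into
    scenario #0, so each scenario has one more perturbation than the previous.
    """
    for event in events:
        if event.perturbs not in PERTURBED_PARTS:
            raise ValueError(f"unknown perturbation target: {event.perturbs}")
    ordered = sorted(events, key=lambda e: e.time)
    return [(k, tuple(ordered[:k])) for k in range(1, len(ordered) + 1)]

if __name__ == "__main__":
    events = [
        DynamicEvent(50, "target_system", "machine M2 breaks down"),
        DynamicEvent(20, "control_system", "one controller stops responding"),
    ]
    for scenario_id, inserted in derive_scenarios(events):
        print(scenario_id, [e.description for e in inserted])
```

Keeping scenario #0 unchanged and describing each dynamic scenario only by its inserted events makes it easy to attribute a change in performance to a specific perturbation.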

The output of the static stage consists only of quantitative data, whereas performance measures in the dynamic stage can be either quantitative (e.g., relative degradation of performance indicators) or qualitative (e.g., robustness level). Note that only the static stage is mandatory; the dynamic stage is optional. However, if researchers need to quantify certain dynamic features of their control system, the static stage must be performed before the dynamic stage, since the static results provide the reference against which the dynamic results are measured. Researchers can then design solutions that either dissociate or integrate the static and dynamic stages, leading to designs that couple static optimization with dynamic behavior (e.g., proactive, reactive, or predictive-reactive scheduling methods; the interested reader can consult Davenport and Beck (2000), who conducted an extensive literature survey of scheduling under uncertainty).
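A common way to express the relative degradation of an indicator is to compare its value in a dynamic scenario, f_dyn, with its value in scenario #0, f_0. For a criterion to be minimized such as Cmax, this gives (f_dyn − f_0) / |f_0|. The sketch below assumes that definition; the paper does not fix the exact formula. It also shows why the static stage has to come first: every dynamic result is divided by the scenario #0 baseline. The function names are hypothetical.

```python
from typing import Dict

def relative_degradation(static_value: float, dynamic_value: float,
                         minimize: bool = True) -> float:
    """Relative loss of a performance indicator w.r.t. the scenario #0 baseline.

    Positive values mean the dynamic scenario performs worse than scenario #0.
    For a criterion to maximize, the sign of the difference is flipped.
    """
    if static_value == 0:
        raise ValueError("static baseline must be non-zero")
    loss = dynamic_value - static_value if minimize else static_value - dynamic_value
    return loss / abs(static_value)

def degradation_report(static_value: float, dynamic_values: Dict[str, float],
                       minimize: bool = True) -> Dict[str, float]:
    """Degradation of every dynamic scenario against the same scenario #0 value."""
    return {name: relative_degradation(static_value, value, minimize)
            for name, value in dynamic_values.items()}

if __name__ == "__main__":
    # Placeholder numbers for illustration only, not measured results.
    print(degradation_report(100.0, {"scenario 1": 110.0, "scenario 2": 125.0}))
```

Normalizing by the baseline makes degradations comparable across data sets whose absolute Cmax values differ.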

Reporting

This step concerns reporting the experimental results in a standardized way:

- The parameters and the quantitative results from the static and dynamic stages are summarized in a report table, which facilitates future comparisons and characterization of the approaches, and

- The qualitative results obtained using the dynamic scenarios are presented via graphic representations.
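The report table can be generated directly from the recorded parameters and results, so that every study presents them in the same layout. The sketch below renders rows as a fixed-width text table. The column names in the usage lines, the function name `report_table`, and the plain-text layout are assumptions, because the benchmark prescribes a report table but not its exact format. The values shown are placeholders, not results.

```python
from typing import Dict, List, Sequence

def report_table(rows: List[Dict[str, object]], columns: Sequence[str]) -> str:
    """Render rows as a fixed-width text table with a header and a rule line.

    Missing cells are left blank; each column is as wide as its longest entry.
    """
    cells = [[str(row.get(col, "")) for col in columns] for row in rows]
    widths = [max([len(col)] + [len(r[i]) for r in cells])
              for i, col in enumerate(columns)]

    def fmt(values: Sequence[str]) -> str:
        return " | ".join(v.ljust(w) for v, w in zip(values, widths))

    lines = [fmt(list(columns)), "-+-".join("-" * w for w in widths)]
    lines += [fmt(r) for r in cells]
    return "\n".join(lines)

if __name__ == "__main__":
    # Hypothetical columns and placeholder values for illustration only.
    cols = ["scenario", "perturbation", "Cmax", "degradation"]
    rows = [
        {"scenario": 0, "perturbation": "none", "Cmax": 100, "degradation": "0.00"},
        {"scenario": 1, "perturbation": "machine breakdown", "Cmax": 110,
         "degradation": "0.10"},
    ]
    print(report_table(rows, cols))
```

A fixed column list shared by all studies is what makes later comparisons straightforward. A CSV export of the same rows would serve equally well.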

This benchmark has been designed generically: it can be applied to different target systems, typically production systems but also others (e.g., healthcare systems). In this paper, the benchmark is applied to the flexible job-shop scheduling problem, whose model is inspired by an existing flexible cell.